PyTorch Deep Learning Quick-Start Tutorial

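1. Environment Setup

For environment setup, see Section 1 of [2]; it resolves most of the installation problems you are likely to encounter.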

2. Loading Data in PyTorch

Subclass Dataset and override its __getitem__() and __len__() methods.

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# @Author :LiJie
# @Time :2024/7/12 12:34 AM
# @Filename :test.py
from torch.utils.data import Dataset, DataLoader
from PIL import Image
import os


class MyData(Dataset):
    def __init__(self, root_dir, label_dir):
        self.root_dir = root_dir
        self.label_dir = label_dir
        # Folder that holds every image of one class, e.g. dataset/train/ants
        self.path = os.path.join(self.root_dir, self.label_dir)
        self.img_path = os.listdir(self.path)

    def __getitem__(self, idx):
        img_name = self.img_path[idx]
        img_item_path = os.path.join(self.path, img_name)
        img = Image.open(img_item_path)
        # The folder name doubles as the class label
        label = self.label_dir
        return img, label

    def __len__(self):
        return len(self.img_path)


root_dir = "dataset/train"
ants_label_dir = "ants"
ants_dataset = MyData(root_dir, ants_label_dir)
img, label = ants_dataset[0]
img.show()

3. Using TensorBoard

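3.1 Installing TensorBoard and Common Problems

TensorBoard visualizes what happens while your code runs, which makes it a very handy companion tool.

TensorBoard is not installed by default. Install it from inside your conda virtual environment:

pip install tensorboard

Then start TensorBoard from a terminal: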

# --logdir sets the log directory
tensorboard --logdir=logs

# --port sets the port (default: 6006)
tensorboard --logdir=logs --port=6007

TensorBoard may fail when the installed NumPy version is too new; downgrading NumPy fixes it.

The error message looks like this: “AttributeError: np.string_ was removed in the NumPy 2.0 release. Use `np.bytes”

pip uninstall numpy
pip install numpy==1.23.0

Other problems you may run into:

TensorBoard prints "TensorFlow installation not found - running with reduced feature set".

Fix: pass an absolute path to --logdir.

tensorboard --logdir="D:\PycharmProjects\PyTorch\another_practice\CIFAR10"

3.2 Using TensorBoard

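Demo code:

from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter("logs")
# writer.add_image()
# y = 2x
for i in range(100):
    # add_scalar usually takes three arguments: the title (tag), the y-axis value, the x-axis value (step)
    writer.add_scalar("y=2x", 2 * i, i)
writer.close()

One more pitfall here: if you change the x-axis or y-axis values but leave the title unchanged, the new points are written into the same chart as the old ones, and TensorBoard draws a tangled curve when it fits a line through them. The fix is to delete the files in the log directory and run the script again.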

from torch.utils.tensorboard import SummaryWriter
import numpy as np
from PIL import Image

img_path = "data/train/bees_image/16838648_415acd9e3f.jpg"
img_PIL = Image.open(img_path)
img_array = np.array(img_PIL)
print(img_array.shape)

writer = SummaryWriter("logs")
# Mind the layout of img_array: it is (H, W, C), while dataformats defaults to 'CHW'
writer.add_image("test", img_array, 2, dataformats='HWC')
# y = 2x
for i in range(100):
    writer.add_scalar("y=2x", 2 * i, i)
writer.close()

4. Using Transforms

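torchvision.transforms is a toolbox of data-preprocessing classes for deep learning.

Commonly used ones:

transforms.ToTensor() converts the data to a tensor

transforms.Normalize() standardizes the data

transforms.Resize(sequence or int) changes the size

transforms.Compose([transform1, transform2, ...]) chains transforms, feeding each one's output to the next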
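As a small worked example of what Normalize computes: each channel is transformed as output = (input - mean) / std, so with mean 0.5 and std 0.5 a ToTensor value in [0, 1] ends up in [-1, 1]. The sketch below reproduces that arithmetic with plain tensor operations; the 3x2x2 tensor is made up for illustration and is not data from this tutorial.

```python
import torch

# Hypothetical 3-channel "image" with values in [0, 1], as ToTensor would produce
img_tensor = torch.tensor([0.0, 0.25, 0.5, 1.0]).reshape(1, 2, 2).repeat(3, 1, 1)

mean = torch.tensor([0.5, 0.5, 0.5]).view(3, 1, 1)
std = torch.tensor([0.5, 0.5, 0.5]).view(3, 1, 1)

# Same per-channel arithmetic that transforms.Normalize applies
img_norm = (img_tensor - mean) / std

print(img_norm[0])  # values now span [-1, 1]
```

The reshaping of mean and std to (3, 1, 1) is only there so that broadcasting applies one value per channel.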

# Common transforms
from torchvision import transforms
from PIL import Image
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter('logs')
img_path = "data/train/ants_image/0013035.jpg"
img = Image.open(img_path)

# ToTensor
trans_totensor = transforms.ToTensor()
img_tensor = trans_totensor(img)
writer.add_image("ToTensor", img_tensor)

# Normalize
print(img_tensor.shape)
print(img_tensor[0][0][0])
trans_norm = transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
img_norm = trans_norm(img_tensor)
print(img_norm[0][0][0])
writer.add_image("Normalize", img_norm)

# Resize
print(img.size)
trans_resize = transforms.Resize((512, 512))
# img PIL -> resize -> img_resize PIL
img_resize = trans_resize(img)
# img_resize PIL -> ToTensor -> img_resize tensor
img_resize_totensor = trans_totensor(img_resize)
writer.add_image("Resize", img_resize_totensor, 0)
print(img_resize)

# Compose - resize - 2: another way to resize
# Resize(512) with a single int scales the shorter edge to 512 and keeps the aspect ratio
trans_resize_2 = transforms.Resize(512)
trans_compose = transforms.Compose([trans_resize_2, transforms.ToTensor()])
img_resize_2 = trans_compose(img)
writer.add_image("Resize", img_resize_2, 1)
writer.close()

5. torchvision Datasets

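If the automatic download is slow, you can find the download URL in the dataset class's source code, fetch the archive with a download manager such as Xunlei (Thunder), and place it in the root directory. Keep download=True: when the code runs, it extracts and verifies the archive automatically.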

import torchvision
from torch.utils.tensorboard import SummaryWriter
from torchvision.transforms import transforms

dataset_transform = transforms.Compose([
    transforms.ToTensor(),
])
train_set = torchvision.datasets.CIFAR10(root='./data2', train=True, transform=dataset_transform, download=True)
test_set = torchvision.datasets.CIFAR10(root='./data2', train=False, transform=dataset_transform, download=True)

writer = SummaryWriter(log_dir='p11')
for i in range(10):
    img, target = test_set[i]
    writer.add_image('test_set', img, i)
writer.close()

6. Using DataLoader

A DataLoader fetches data from a dataset, one batch at a time.
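To make the effect of batch_size and drop_last concrete, here is a minimal sketch on a synthetic dataset. The TensorDataset of 10 samples is a hypothetical stand-in for CIFAR10, chosen so that the last batch is incomplete.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in dataset: 10 samples with 3 features each, labels 0..9
features = torch.arange(30, dtype=torch.float32).reshape(10, 3)
labels = torch.arange(10)
toy_set = TensorDataset(features, labels)

keep_last = DataLoader(toy_set, batch_size=4, shuffle=False, drop_last=False)
drop_last = DataLoader(toy_set, batch_size=4, shuffle=False, drop_last=True)

keep_sizes = [len(batch_labels) for _, batch_labels in keep_last]
drop_sizes = [len(batch_labels) for _, batch_labels in drop_last]

print(keep_sizes)  # [4, 4, 2]
print(drop_sizes)  # [4, 4]
```

With 10 samples and batch_size=4, the leftover 2 samples form a smaller final batch unless drop_last=True discards them.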

import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

test_set = torchvision.datasets.CIFAR10(root='./data2', train=False, transform=torchvision.transforms.ToTensor())

# shuffle=True draws the samples in a different order on every pass; False keeps the order fixed
# drop_last decides what happens to the samples left over after dividing by batch_size: False keeps them, True discards them
# num_workers is the number of worker subprocesses used for loading (0 loads in the main process)
test_loader = DataLoader(dataset=test_set, batch_size=64, shuffle=True, num_workers=0, drop_last=False)
print(test_loader)

img, target = test_set[0]
print(img.shape)
print(target)

i = 0
writer = SummaryWriter(log_dir='dataloader')
step = 0
for data in test_loader:
    i += 1
    print(f"==========={i}===================")
    imgs, targets = data
    # print(imgs.shape)
    # print(targets.shape)
    writer.add_images('test_set', imgs, step)
    step += 1
writer.close()

7. Using nn.Module

Example:

import torch.nn as nn
import torch.nn.functional as F


class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 20, 5)
        self.conv2 = nn.Conv2d(20, 20, 5)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        return F.relu(self.conv2(x))
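The following sketch shows how such a module is used: calling the instance runs forward(). The input size of 1x1x28x28 is an assumption picked only for illustration; each 5x5 convolution without padding shrinks the height and width by 4.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 20, 5)
        self.conv2 = nn.Conv2d(20, 20, 5)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        return F.relu(self.conv2(x))


model = Model()
x = torch.randn(1, 1, 28, 28)  # assumed input size, for illustration only
y = model(x)                   # calling the module runs forward()
print(y.shape)                 # torch.Size([1, 20, 20, 20])
```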

8. nn_conv: The Convolution Function

torch.nn.functional.conv2d(input, weight, bias=None, stride=1, padding=0, dilation=1, groups=1)

input must be a four-dimensional tensor: input – input tensor of shape (minibatch, in_channels, iH, iW)
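What the convolution computes can be checked by hand. The sketch below slides a kernel over an image in plain Python, using the same 5x5 input and 3x3 kernel values as this section's example. The helper conv2d_single is a hypothetical name, and it assumes a square image, a square kernel, and no padding.

```python
def conv2d_single(image, kernel, stride=1):
    """Cross-correlate one 2-D image with one 2-D kernel (no padding)."""
    k = len(kernel)
    out_size = (len(image) - k) // stride + 1
    output = []
    for row in range(out_size):
        out_row = []
        for col in range(out_size):
            top, left = row * stride, col * stride
            # Multiply the window element-wise with the kernel and sum the products
            total = sum(image[top + i][left + j] * kernel[i][j]
                        for i in range(k) for j in range(k))
            out_row.append(total)
        output.append(out_row)
    return output


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]

result_stride1 = conv2d_single(image, kernel, stride=1)
result_stride2 = conv2d_single(image, kernel, stride=2)
print(result_stride1)  # [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
print(result_stride2)  # [[10, 12], [13, 3]]
```

A larger stride skips window positions, which is why the stride-2 result keeps only the four corner values of the stride-1 result.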

import torch.nn.functional as F
import torch

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]])
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]])
# print(input.shape)
# print(kernel.shape)

# F.conv2d expects 4-D tensors, so add the batch and channel dimensions
input = torch.reshape(input, [1, 1, 5, 5])
kernel = torch.reshape(kernel, [1, 1, 3, 3])

output = F.conv2d(input, kernel, stride=1, padding=0)
print(output)

output2 = F.conv2d(input, kernel, stride=2, padding=0)
print(output2)

9. nn_Conv2d

Pay attention to how the height, width, and channel count change between a layer's input and its output.

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10(root='../data2', train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.conv1 = Conv2d(3, 6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x


tudui = Tudui()
print(tudui)

for data in dataloader:
    imgs, targets = data
    output = tudui(imgs)
    print(output.shape)

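10. Max Pooling: MaxPool2d

Max pooling shrinks the data while keeping its salient features. Note the ceil_mode parameter: with ceil_mode=True, a window that the kernel cannot fully cover still produces an output value.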

import torch
import torchvision
from torch import nn
from torch.nn import MaxPool2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10(root='../data2', train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
input = torch.reshape(input, (-1, 1, 5, 5))


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=False)

    def forward(self, input):
        output = self.maxpool1(input)
        return output


tudui = Tudui()
# output = tudui(input)
# print(output)

writer = SummaryWriter(log_dir='../logs_maxpool')
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images('input', imgs, step)
    output = tudui(imgs)
    writer.add_images("output", output, step)
    step += 1
writer.close()

- Input: $(N, C_{in}, H_{in}, W_{in})$ or $(C_{in}, H_{in}, W_{in})$

- Output: $(N, C_{out}, H_{out}, W_{out})$ or $(C_{out}, H_{out}, W_{out})$, where

$$
H_{out} = \left\lfloor \frac{H_{in} + 2 \times \text{padding}[0] - \text{dilation}[0] \times (\text{kernel\_size}[0] - 1) - 1}{\text{stride}[0]} + 1 \right\rfloor
$$

$$
W_{out} = \left\lfloor \frac{W_{in} + 2 \times \text{padding}[1] - \text{dilation}[1] \times (\text{kernel\_size}[1] - 1) - 1}{\text{stride}[1]} + 1 \right\rfloor
$$

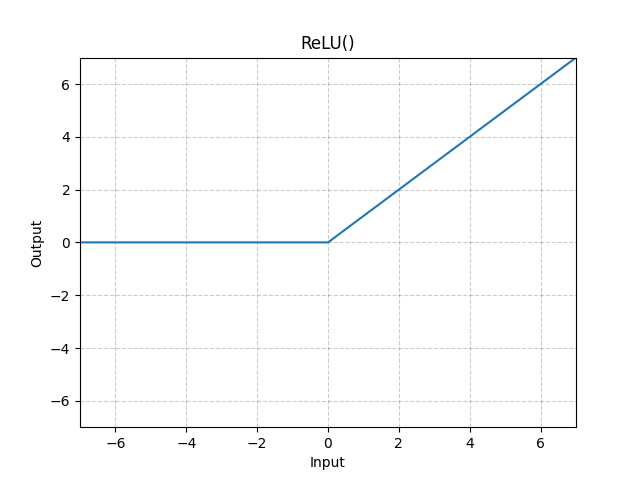
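The output-size formula is easy to evaluate in a few lines of plain Python. The helper out_size below is a hypothetical name, not a PyTorch function, and it handles one spatial dimension at a time. The ceil_mode branch is an assumption that mirrors my understanding of PyTorch's pooling rule, where the last window must start inside the (left-padded) input. Note that MaxPool2d uses stride=kernel_size by default, so the stride has to be passed explicitly here.

```python
import math


def out_size(in_size, kernel_size, stride=1, padding=0, dilation=1, ceil_mode=False):
    """Output length along one spatial dimension for Conv2d / MaxPool2d."""
    span = in_size + 2 * padding - dilation * (kernel_size - 1) - 1
    if ceil_mode:
        size = math.ceil(span / stride) + 1
        # Assumed rule: drop the last window if it would start outside the padded input
        if (size - 1) * stride >= in_size + padding:
            size -= 1
        return size
    return span // stride + 1


print(out_size(32, kernel_size=3))                            # 30
print(out_size(32, kernel_size=5, padding=2))                 # 32
print(out_size(5, kernel_size=3, stride=3))                   # 1
print(out_size(5, kernel_size=3, stride=3, ceil_mode=True))   # 2
```

The first call matches Conv2d(3, 6, kernel_size=3) on a 32x32 CIFAR10 image, and the last two match MaxPool2d(kernel_size=3) on the 5x5 example tensor with and without ceil_mode.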

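11. Nonlinear Activations

nn.ReLU() takes an inplace parameter: when it is True, the input tensor itself is overwritten with the result. The default is False.

$$

\text{ReLU}(x) = (x)^+ = \max(0, x)

$$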
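The difference between inplace=False and inplace=True can be seen on a tiny tensor. This is a minimal sketch; the tensor values are made up for illustration.

```python
import torch
from torch import nn

x = torch.tensor([[1.0, -0.5], [-1.0, 3.0]])

x_keep = x.clone()
out = nn.ReLU(inplace=False)(x_keep)  # x_keep is left untouched
print(x_keep)  # still contains the negative values
print(out)     # negatives replaced by 0

x_over = x.clone()
nn.ReLU(inplace=True)(x_over)         # x_over itself is overwritten
print(x_over)  # same values as out
```

Leaving inplace at False keeps the original data available, at the cost of allocating a second tensor.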

import torch
import torch.nn as nn
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10(root='../data2', train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # self.relu = nn.ReLU()
        self.sigmoid = nn.Sigmoid()

    def forward(self, input):
        output = self.sigmoid(input)
        return output


tudui = Tudui()
writer = SummaryWriter(log_dir='../logs_sigmod')
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images('input', imgs, step)
    output = tudui(imgs)
    writer.add_images("output_sigmoid", output, step)
    step += 1
writer.close()

12. Linear Layers (torch.nn.Linear)

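A linear layer is also called a fully connected layer: every neuron in it is connected to every neuron of the previous layer. A simple linear layer is shown in the figure below:

The constructor is torch.nn.Linear(in_features, out_features, bias=True, device=None, dtype=None). Its three important parameters, in_features, out_features, and bias, are:

in_features: the number of features in each input sample (x)

out_features: the number of features in each output sample (y)

bias: if set to False, the layer does not learn an additive bias. The default is True, which means a bias is learned.

In the figure above, in_features=d and out_features=L.

One use of a linear layer is to shorten a one-dimensional feature vector.

In the code below, note that drop_last is set to True. Otherwise the last batch holds only 16 images, flattens to fewer than 196608 values, and the linear layer raises a shape error.

torch.flatten flattens a tensor; Tensor.view(-1) or torch.reshape(x, (-1,)) can be used instead.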
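The sketch below illustrates the shapes involved, using a tensor of ones as a stand-in for one CIFAR10 batch. The second half shows an alternative that this tutorial's code does not use: flattening with start_dim=1 keeps the batch dimension, so the layer sees 3 * 32 * 32 = 3072 features per image and works for any batch size.

```python
import torch
from torch import nn

imgs = torch.ones(64, 3, 32, 32)  # stand-in for one CIFAR10 batch

# Flattening everything gives one vector of 64 * 3 * 32 * 32 = 196608 values
flat_all = torch.flatten(imgs)
print(flat_all.shape)  # torch.Size([196608])
print(imgs.view(-1).shape, torch.reshape(imgs, (-1,)).shape)  # same result

# Alternative (not what the tutorial code does): keep the batch dimension
flat_per_image = torch.flatten(imgs, start_dim=1)
print(flat_per_image.shape)  # torch.Size([64, 3072])

linear = nn.Linear(in_features=3072, out_features=10)
out = linear(flat_per_image)
print(out.shape)  # torch.Size([64, 10])
```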

import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10(root='../data2', train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
img_data = DataLoader(dataset=dataset, batch_size=64, drop_last=True)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # 196608 = 64 * 3 * 32 * 32: one whole batch flattened into a single vector
        self.linear1 = nn.Linear(in_features=196608, out_features=10)

    def forward(self, x):
        x = self.linear1(x)
        return x


tudui = Tudui()

for data in img_data:
    imgs, targets = data
    print(imgs.shape)
    imgs_flatten = torch.flatten(imgs)
    print(imgs_flatten.shape)
    linear_out = tudui(imgs_flatten)
    print(linear_out.shape)

13. Sequential

nn.Sequential wraps the parts of a network into a single model that runs them in order.

import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Linear, Flatten
from torch.utils.tensorboard import SummaryWriter


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = nn.Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


tudui = Tudui()
print(tudui)
input = torch.ones((64, 3, 32, 32))
output = tudui(input)
print(output.shape)

writer = SummaryWriter('../logs_seq')
writer.add_graph(tudui, input)
writer.close()
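To see where the 1024 in Linear(1024, 64) comes from, one can push a dummy batch through the layers one at a time and record each output shape. This is a sketch that rebuilds the same layer stack outside the class for convenience; iterating over an nn.Sequential yields its layers in order.

```python
import torch
from torch import nn

layers = nn.Sequential(
    nn.Conv2d(3, 32, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Conv2d(32, 32, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Conv2d(32, 64, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Flatten(),
    nn.Linear(1024, 64),
    nn.Linear(64, 10),
)

x = torch.ones(64, 3, 32, 32)
shapes = []
for layer in layers:
    x = layer(x)
    shapes.append(tuple(x.shape))
    print(f"{layer.__class__.__name__:<10} -> {tuple(x.shape)}")
```

After the third pooling layer the tensor is 64 channels of 4x4, and Flatten turns that into 64 * 4 * 4 = 1024 features per image.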

14. Loss Functions and Backpropagation

loss test
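The three losses used in this section can be checked by hand with the standard library alone. The sketch below assumes the default reduction='mean' for L1Loss and MSELoss, and writes cross entropy for a single sample as -logit[target] + log(sum(exp(logits))); it uses the same numbers as the PyTorch code in this section.

```python
import math

x1 = [1.0, 2.0, 3.0]
y1 = [1.0, 2.0, 5.0]

# L1Loss with reduction='mean': mean absolute error
l1 = sum(abs(a - b) for a, b in zip(x1, y1)) / len(x1)

# MSELoss with reduction='mean': mean squared error
mse = sum((a - b) ** 2 for a, b in zip(x1, y1)) / len(x1)

# CrossEntropyLoss for one sample: -logit[target] + log(sum(exp(logits)))
logits = [0.1, 0.2, 0.3]
target = 1
cross_entropy = -logits[target] + math.log(sum(math.exp(v) for v in logits))

print(round(l1, 4), round(mse, 4), round(cross_entropy, 4))  # 0.6667 1.3333 1.1019
```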

"""

@Author: LiJie
@FileName: nn_loss.py
@DateTime: 2024/7/15 7:33 PM
"""
from torch.nn import L1Loss, MSELoss, CrossEntropyLoss
import torch

x1 = torch.tensor([1, 2, 3], dtype=torch.float32)
y1 = torch.tensor([1, 2, 5], dtype=torch.float32)

# L1Loss
loss1 = L1Loss()
loss_l1 = loss1(x1, y1)
print(f"loss_l1 = {loss_l1}")

# MSELoss
loss2 = MSELoss()
loss_mse = loss2(x1, y1)
print(f"loss_mse = {loss_mse}")

# CrossEntropyLoss
loss3 = CrossEntropyLoss()
x2 = torch.tensor([0.1, 0.2, 0.3], dtype=torch.float32)
print(x2.shape)
# CrossEntropyLoss expects scores of shape (N, C) and targets of shape (N,)
x2 = torch.reshape(x2, [1, 3])
y2 = torch.tensor([1])
loss_cross_entropy = loss3(x2, y2)
print(f"loss_cross_entropy = {loss_cross_entropy}")

loss + backward

"""

@Author: LiJie
@FileName: nn_network_loss.py
@DateTime: 2024/7/15 8:19 PM
"""
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Linear, Flatten
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10(root='../data2', train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=1)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = nn.Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


loss = nn.CrossEntropyLoss()
tudui = Tudui()
step = 1
for data in dataloader:
    imgs, targets = data
    output = tudui(imgs)
    result_loss = loss(output, targets)
    # backward() fills in the .grad of every parameter
    result_loss.backward()
    print(step)
    step += 1

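15. Optimizers: torch.optim

1. Process

The previous section computed the loss and ran backpropagation.

The next step is to let an optimizer use the resulting gradients to update the parameters, lowering the loss step by step.

The dataset usually has to be scanned several times, with many parameter updates, before the result is good.

Note: reset the gradients to zero after every update, then compute them afresh.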
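Why the gradients have to be zeroed can be shown on a single toy parameter. In the sketch below the loss w ** 2 and the learning rate 0.1 are assumptions chosen so that the arithmetic is easy to follow.

```python
import torch

w = torch.tensor(1.0, requires_grad=True)  # a single toy parameter
optim = torch.optim.SGD([w], lr=0.1)

# Two backward passes without zeroing: the gradients add up (2w + 2w = 4)
(w ** 2).backward()
(w ** 2).backward()
accumulated_grad = w.grad.item()
print(accumulated_grad)  # 4.0

# The usual order: zero the gradients, backpropagate, then update
optim.zero_grad()
loss = w ** 2
loss.backward()          # d(w^2)/dw = 2w = 2
optim.step()             # w <- w - lr * grad = 1 - 0.1 * 2
print(w.item())          # approximately 0.8
```

Without zero_grad() the update would have used the stale, accumulated gradient instead of the gradient of the current loss.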

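2. Common optimizers

There are many optimizers; stochastic gradient descent (SGD) is one of the most commonly used.

Different optimizers generally take different argument lists, but all of them have params (the model's parameters) and lr (the learning rate).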

Example:

"""
@Author: LiJie
@FileName: nn_optim.py
@DateTime: 2024/7/15 8:50 PM
"""
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Linear, Flatten
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10(root='../data2', train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=1)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = nn.Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


loss = nn.CrossEntropyLoss()
tudui = Tudui()
optim = torch.optim.SGD(tudui.parameters(), lr=0.01)
step = 1
for epoch in range(10):
    loss_sum = 0.0
    for data in dataloader:
        imgs, targets = data
        output = tudui(imgs)
        result_loss = loss(output, targets)
        optim.zero_grad()
        result_loss.backward()
        optim.step()
        # .item() adds a plain float, so the computation graph is not kept alive
        loss_sum += result_loss.item()
    print('epoch:', epoch, 'loss:', loss_sum)

16. VGG16

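This section uses the torchvision.models.vgg16 model. Newer versions of vgg16 replace the pretrain parameter with weights: weights=None means no pretrained weights, and the other options are weights='IMAGENET1K_V1' or weights='IMAGENET1K_FEATURES'. (One oddity here: the official documentation says DEFAULT is equivalent to IMAGENET1K_V1, while the description in the source file says DEFAULT means no pretraining. Running the code shows that the official documentation is right.)

Downloading the model can be very slow. The download URL is printed when the download starts, so you can copy it into Xunlei (Thunder), download the file there, and then move it into the download directory (the directory is also printed at run time). The download directory is: C:\Users\<username>\.cache\torch\hub\checkpoints

Changing where the pretrained VGG weights are downloaded to: still to be written.

Modifying the structure of the VGG model, also referred to as model transfer. Three kinds of modification, in code: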

"""

@Author: LiJie
@FileName: nn_VGG16.py
@DateTime: 2024/7/16 3:18 PM
"""
import torchvision
from torch import nn

vgg16_false = torchvision.models.vgg16(weights=None)
vgg16_true = torchvision.models.vgg16(weights='IMAGENET1K_V1')
print("Original vgg16 structure")
print(vgg16_false)
print(vgg16_true)

# Add a linear layer at the outermost level
# (this only registers the layer; VGG's forward() does not call it)
print("vgg16 with a linear layer added at the outermost level")
vgg16_true.add_module('add_linear', nn.Linear(in_features=1000, out_features=10))
print(vgg16_true)

# Add a linear layer inside classifier
print("vgg16 with a linear layer added to classifier")
vgg16_false.classifier.add_module('add_linear', nn.Linear(in_features=1000, out_features=10))
print(vgg16_false)

# Replace layer 6 of classifier
# classifier[6] is Linear(4096, 1000), so the replacement must also take 4096 input features
print("vgg16 with classifier layer 6 replaced")
vgg16_true.classifier[6] = nn.Linear(in_features=4096, out_features=10)
print(vgg16_true)

17. Saving and Loading Models

module_save.py

"""

@Author: LiJie
@FileName: module_save.py
@DateTime: 2024/7/16 4:28 PM
"""
import torch
import torchvision
from torch import nn

vgg16 = torchvision.models.vgg16(weights=None)

# Method 1: save the model structure together with its parameters
torch.save(vgg16, "vgg16_method1.pth")

# Method 2: save only the model parameters (officially recommended)
torch.save(vgg16.state_dict(), "vgg16_method2.pth")


# The pitfall of method 1
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.conv1 = nn.Conv2d(3, 64, kernel_size=3, padding=1)

    def forward(self, x):
        x = self.conv1(x)
        return x


tudui = Tudui()
# Lowercase file name, matching the name that module_load.py reads
torch.save(tudui, "tudui_method1.pth")

module_load.py

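When a custom model is saved with method 1, the script that loads it must import the model's source code (its class definition); otherwise loading raises an error.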

"""

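@Author: LiJie
@FileName: module_load.py
@DateTime: 2024/7/16 4:31 PM
"""
import torch
import torchvision
from module_save import *  # brings in the Tudui class that method 1 needs

# Method 1: load the whole model
# Assumption: on PyTorch 2.6 and later torch.load defaults to weights_only=True,
# so loading a whole pickled model needs weights_only=False
model1 = torch.load("vgg16_method1.pth", weights_only=False)
print(model1)

# Method 2: build the model first, then load the saved parameters into it
# weights=None is enough here because load_state_dict overwrites every parameter
vgg16 = torchvision.models.vgg16(weights=None)
vgg16.load_state_dict(torch.load("vgg16_method2.pth"))
print(vgg16)

# The pitfall of method 1
model_tudui = torch.load("tudui_method1.pth", weights_only=False)
print(model_tudui)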
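The recommended state_dict workflow can be tried without touching the disk. The sketch below uses TinyNet, a hypothetical stand-in for a custom model, and an in-memory io.BytesIO buffer in place of a .pth file.

```python
import io

import torch
from torch import nn


class TinyNet(nn.Module):  # hypothetical stand-in for a custom model
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 8, kernel_size=3, padding=1)

    def forward(self, x):
        return self.conv1(x)


source = TinyNet()
buffer = io.BytesIO()                 # in-memory file, so nothing is written to disk
torch.save(source.state_dict(), buffer)

buffer.seek(0)
restored = TinyNet()                  # the class must be defined before loading
restored.load_state_dict(torch.load(buffer))

same = all(torch.equal(a, b) for a, b in
           zip(source.state_dict().values(), restored.state_dict().values()))
print(same)  # True
```

Because only tensors are stored, the loading side needs the class definition to build the model, but it does not need to unpickle arbitrary Python objects.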

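18. Training a Model on the GPU

Training on the CPU

- Prepare the datasets
- Load the data with DataLoader
- Create the network model
- Set the loss function
- Set the optimizer
- Start training
- model.train()
- Training step
  - Compute the model output
  - Optimize with the optimizer: zero the gradients; backpropagate; optimizer.step()
- Test step, under with torch.no_grad():
  - Compute the loss and the accuracy: accuracy = (outputs.argmax(1) == targets).sum()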
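The accuracy expression can be unpacked on a tiny example. The scores and targets below are made up for illustration: 4 samples and 3 classes.

```python
import torch

# Hypothetical scores for 4 samples and 3 classes
outputs = torch.tensor([[0.1, 0.7, 0.2],
                        [0.8, 0.1, 0.1],
                        [0.3, 0.3, 0.4],
                        [0.2, 0.5, 0.3]])
targets = torch.tensor([1, 0, 1, 1])

preds = outputs.argmax(1)                  # index of the largest score in each row
correct = (preds == targets).sum().item()  # number of matching predictions
accuracy = correct / len(targets)

print(preds)     # tensor([1, 0, 2, 1])
print(correct)   # 3
print(accuracy)  # 0.75
```

Note that the sum is a count of correct predictions, so it still has to be divided by the number of samples to become an accuracy.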

Code example:

model.py

"""

@Author: LiJie
@FileName: model.py
@DateTime: 2024/7/16 7:23 PM
"""
import torch
from torch import nn


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2, stride=2),
            nn.Conv2d(32, 32, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2, stride=2),
            nn.Conv2d(32, 64, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2, stride=2),
            nn.Flatten(),
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10),
        )

    def forward(self, x):
        x = self.model(x)
        return x


if __name__ == '__main__':
    tudui = Tudui()
    input = torch.ones((64, 3, 32, 32))
    output = tudui(input)
    print(output.shape)

train.py

"""

@Author: LiJie
@FileName: train.py
@DateTime: 2024/7/16 7:21 PM
"""
import torch
from torch import nn
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from model import *

# Datasets
train_set = torchvision.datasets.CIFAR10(root='../data2', train=True, download=True,
                                         transform=torchvision.transforms.ToTensor())
test_set = torchvision.datasets.CIFAR10(root='../data2', train=False, download=True,
                                        transform=torchvision.transforms.ToTensor())

# Dataset sizes
train_data_len = len(train_set)
test_data_len = len(test_set)
print("Training set size: {}".format(train_data_len))
print("Test set size: {}".format(test_data_len))

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_set, batch_size=64, shuffle=True)
test_dataloader = DataLoader(test_set, batch_size=64, shuffle=True)

# Create the network model
tudui = Tudui()

# Loss function
loss_fn = nn.CrossEntropyLoss()

# Optimizer
learning_rate = 0.01  # learning rate
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Training bookkeeping
total_train_step = 0  # number of training steps so far
total_test_step = 0  # number of test rounds so far
epochs = 10  # number of epochs

# Add TensorBoard to watch the loss and the accuracy
writer = SummaryWriter('../logs_train')

# Start training
for epoch in range(epochs):
    print("==========epoch:{}==========".format(epoch))

    # Training step
    tudui.train()
    for data in train_dataloader:
        imgs, targets = data
        outputs = tudui(imgs)
        loss = loss_fn(outputs, targets)

        # Let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step += 1
        if total_train_step % 100 == 0:
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar('train_loss', loss.item(), total_train_step)

    # Test step
    tudui.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss += loss.item()
            accuracy = (outputs.argmax(1) == targets).sum()
            total_accuracy += accuracy.item()

    print("Loss on the test set: {}".format(total_test_loss))
    print("Accuracy on the test set: {}".format(total_accuracy / test_data_len))
    writer.add_scalar('test_loss', total_test_loss, total_test_step)
    writer.add_scalar('test_accuracy', total_accuracy / test_data_len, total_test_step)
    total_test_step += 1

    torch.save(tudui, "tudui_{}.pth".format(epoch))
    print("model saved")

writer.close()

Training on the GPU

Method 1:

The objects that can be moved to the GPU are:

- the network model;
- the loss function;
- the training data and the test data.

if torch.cuda.is_available():
    zzz = zzz.cuda()

Test code:

"""

@Author: LiJie
@FileName: train.py
@DateTime: 2024/7/16 7:21 PM
"""
import torch
from torch import nn
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
import time
from model import *

# Datasets
train_set = torchvision.datasets.CIFAR10(root='../data2', train=True, download=True,
                                         transform=torchvision.transforms.ToTensor())
test_set = torchvision.datasets.CIFAR10(root='../data2', train=False, download=True,
                                        transform=torchvision.transforms.ToTensor())

# Dataset sizes
train_data_len = len(train_set)
test_data_len = len(test_set)
print("Training set size: {}".format(train_data_len))
print("Test set size: {}".format(test_data_len))

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_set, batch_size=64, shuffle=True)
test_dataloader = DataLoader(test_set, batch_size=64, shuffle=True)

# Create the network model
tudui = Tudui()
if torch.cuda.is_available():
    tudui = tudui.cuda()

# Loss function
loss_fn = nn.CrossEntropyLoss()
if torch.cuda.is_available():
    loss_fn = loss_fn.cuda()

# Optimizer
learning_rate = 0.01  # learning rate
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Training bookkeeping
total_train_step = 0  # number of training steps so far
total_test_step = 0  # number of test rounds so far
epochs = 10  # number of epochs

# Add TensorBoard to watch the loss and the accuracy
# writer = SummaryWriter('../logs_train')

start_time = time.time()

# Start training
for epoch in range(epochs):
    print("==========epoch:{}==========".format(epoch))

    # Training step
    tudui.train()
    for data in train_dataloader:
        imgs, targets = data
        if torch.cuda.is_available():
            imgs = imgs.cuda()
            targets = targets.cuda()
        outputs = tudui(imgs)
        loss = loss_fn(outputs, targets)

        # Let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step += 1
        end_time = time.time()
        if total_train_step % 100 == 0:
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            print("Elapsed training time: {}".format(end_time - start_time))
            # writer.add_scalar('train_loss', loss.item(), total_train_step)

    # Test step
    tudui.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            if torch.cuda.is_available():
                imgs = imgs.cuda()
                targets = targets.cuda()
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss += loss.item()
            accuracy = (outputs.argmax(1) == targets).sum()
            total_accuracy += accuracy.item()

    print("Loss on the test set: {}".format(total_test_loss))
    print("Accuracy on the test set: {}".format(total_accuracy / test_data_len))
    # writer.add_scalar('test_loss', total_test_loss, total_test_step)
    # writer.add_scalar('test_accuracy', total_accuracy / test_data_len, total_test_step)
    total_test_step += 1

    # torch.save(tudui, "tudui_{}.pth".format(epoch))
    # print("model saved")

# writer.close()

Method 2:

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
zzz = zzz.to(device)

Test code:

"""

@Author: LiJie
@FileName: train_gpu2.py
@DateTime: 2024/7/16 8:23 PM
"""
import torch
from torch import nn
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from model import *

# Define the training device
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Datasets
train_set = torchvision.datasets.CIFAR10(root='../data2', train=True, download=True,
                                         transform=torchvision.transforms.ToTensor())
test_set = torchvision.datasets.CIFAR10(root='../data2', train=False, download=True,
                                        transform=torchvision.transforms.ToTensor())

# Dataset sizes
train_data_len = len(train_set)
test_data_len = len(test_set)
print("Training set size: {}".format(train_data_len))
print("Test set size: {}".format(test_data_len))

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_set, batch_size=64, shuffle=True)
test_dataloader = DataLoader(test_set, batch_size=64, shuffle=True)

# Create the network model
tudui = Tudui()
tudui = tudui.to(device)

# Loss function
loss_fn = nn.CrossEntropyLoss()
loss_fn = loss_fn.to(device)

# Optimizer
learning_rate = 0.01  # learning rate
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Training bookkeeping
total_train_step = 0  # number of training steps so far
total_test_step = 0  # number of test rounds so far
epochs = 10  # number of epochs

# Add TensorBoard to watch the loss and the accuracy
# writer = SummaryWriter('../logs_train')

# Start training
for epoch in range(epochs):
    print("==========epoch:{}==========".format(epoch))

    # Training step
    tudui.train()
    for data in train_dataloader:
        imgs, targets = data
        imgs, targets = imgs.to(device), targets.to(device)
        outputs = tudui(imgs)
        loss = loss_fn(outputs, targets)

        # Let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step += 1
        if total_train_step % 100 == 0:
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            # writer.add_scalar('train_loss', loss.item(), total_train_step)

    # Test step
    tudui.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            imgs, targets = imgs.to(device), targets.to(device)
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss += loss.item()
            accuracy = (outputs.argmax(1) == targets).sum()
            total_accuracy += accuracy.item()

    print("Loss on the test set: {}".format(total_test_loss))
    print("Accuracy on the test set: {}".format(total_accuracy / test_data_len))
    # writer.add_scalar('test_loss', total_test_loss, total_test_step)
    # writer.add_scalar('test_accuracy', total_accuracy / test_data_len, total_test_step)
    total_test_step += 1

    torch.save(tudui, "tudui_{}.pth".format(epoch))
    print("model saved")

# writer.close()

To be updated.